How to Build Plain Old Data: A Step-by-Step Guide

Plain Old Data (POD) is the foundation of effective AI systems, but creating it from scratch requires careful planning, execution, and maintenance. Whether you're starting a new project or overhauling an existing dataset, here's a comprehensive guide to building high-quality POD that powers your AI initiatives.

Step 1: Define Your Objectives

Before collecting any data, clearly define the purpose of your AI project. Ask yourself:

- What problem am I trying to solve?

- What kind of insights or predictions do I need?

- What type of data will best serve this purpose?

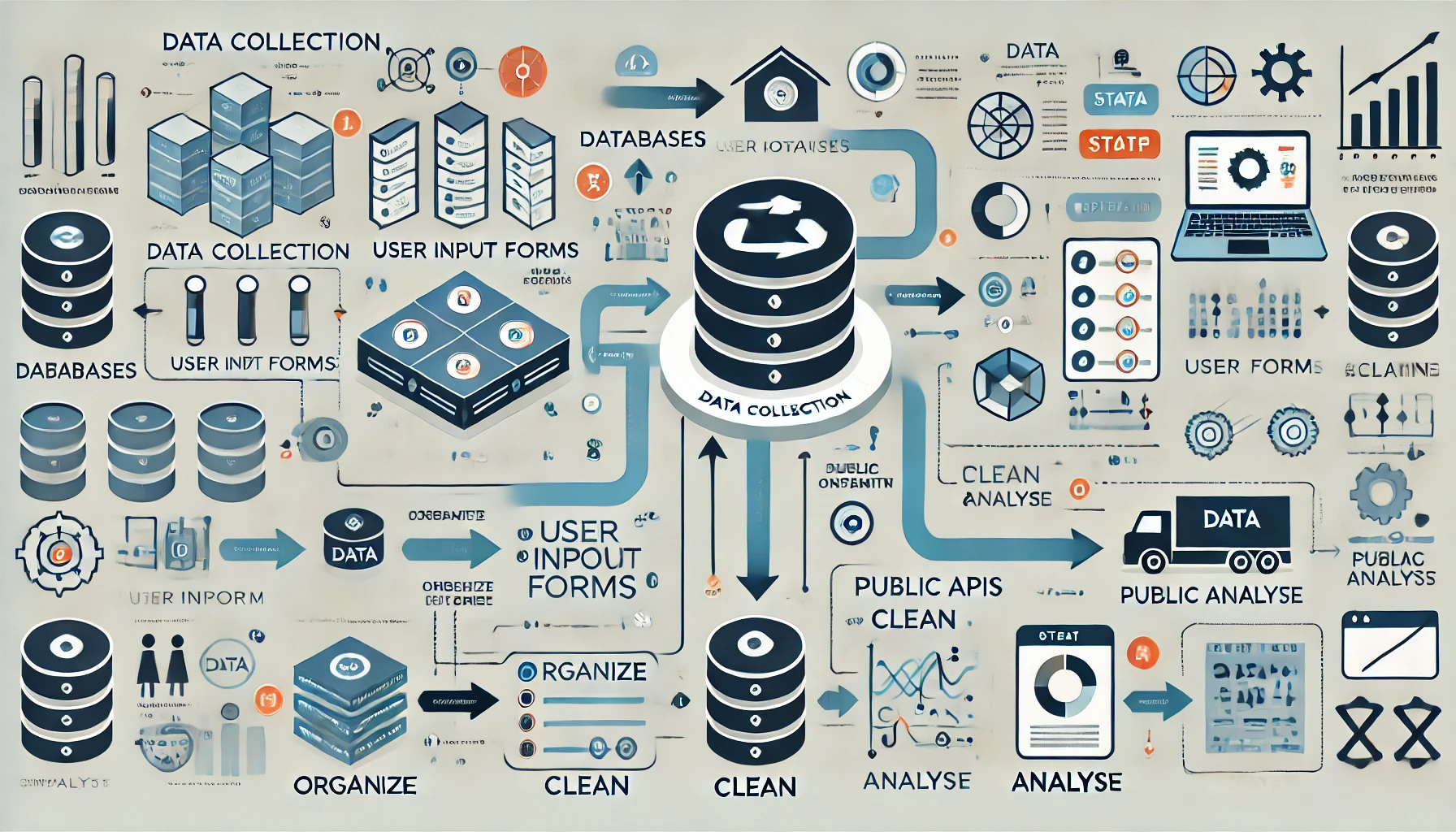

Step 2: Identify Data Sources

Once you know what data you need, identify where it will come from. Potential sources include:

- Internal Systems: Databases, CRM tools, transaction logs, or IoT devices.

- Public Datasets: Open data repositories like Kaggle, government databases, or academic datasets.

- Third-Party Providers: Data vendors or APIs that offer specialized datasets.

- User Input: Surveys, feedback forms, or user-generated content.

Step 3: Design a Data Collection Plan

Create a structured plan for collecting data. This includes:

- Data Types: Decide on the types of data you need (e.g., numerical, categorical, text, images).

- Data Format: Choose a consistent format (e.g., CSV, JSON, or SQL tables) for easy processing.

- Frequency: Determine how often data will be collected (e.g., real-time, daily, weekly).

- Volume: Estimate the amount of data required to train your AI model effectively.
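A plan is easier to enforce when it is written down in code rather than only in a document. The sketch below is one way to do that. It assumes a hypothetical `CollectionPlan` class and example field names. The class records the data types, format, frequency, and target volume from the list above, rejects unknown types or formats, and can report which planned fields an incoming record lacks.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical vocabularies matching the examples in the plan above.
ALLOWED_TYPES = {"numerical", "categorical", "text", "image"}
ALLOWED_FORMATS = {"csv", "json", "sql"}


@dataclass
class CollectionPlan:
    field_types: dict[str, str]   # column name -> one of ALLOWED_TYPES
    file_format: str = "csv"      # one consistent format for every batch
    frequency: str = "daily"      # e.g. "real-time", "daily", "weekly"
    target_rows: int = 10_000     # rough volume estimate for training

    def __post_init__(self) -> None:
        # Reject plans that use data types or formats nobody agreed on.
        bad = {name: t for name, t in self.field_types.items() if t not in ALLOWED_TYPES}
        if bad:
            raise ValueError(f"unknown data types: {bad}")
        if self.file_format not in ALLOWED_FORMATS:
            raise ValueError(f"unsupported format: {self.file_format}")

    def missing_fields(self, record: dict) -> list[str]:
        """Planned fields that an incoming record does not contain."""
        return [name for name in self.field_types if name not in record]

    def progress(self, rows_collected: int) -> float:
        """Fraction of the volume target reached, capped at 1.0."""
        return min(rows_collected / self.target_rows, 1.0)


plan = CollectionPlan(
    field_types={"customer_id": "categorical", "amount": "numerical", "comment": "text"},
    file_format="csv",
    frequency="daily",
    target_rows=5_000,
)
print(plan.missing_fields({"customer_id": "c1", "amount": 12.5}))  # ['comment']
print(plan.progress(1_250))  # 0.25
```

Checking records against the plan at collection time catches schema drift early, before it reaches the cleaning stage.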

Step 4: Collect and Organize Data

Start gathering data according to your plan. Use tools like web scrapers, APIs, or data entry forms to streamline the process. As you collect data, organize it into a structured format:

- Use tables for tabular data (e.g., spreadsheets or databases).

- Store text data in plain text files or JSON format.

- Save images or videos in standardized formats (e.g., JPEG, PNG, MP4).
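Here is a minimal sketch of the organizing step using only the Python standard library. Tabular records go to CSV with a header row. Text records go to JSON Lines, which stores one JSON object per line and is a common variant of the JSON format mentioned above. The function names, file names, and the temporary output directory are illustrative assumptions.

```python
from __future__ import annotations

import csv
import json
import tempfile
from pathlib import Path


def save_tabular(rows: list[dict], path: Path) -> None:
    """Write records that share the same keys to a CSV file with a header row."""
    if not rows:
        raise ValueError("no rows to write")
    with path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(rows[0]))
        writer.writeheader()
        # DictWriter raises ValueError if a row has keys not in the header.
        writer.writerows(rows)


def save_text(docs: list[dict], path: Path) -> None:
    """Write text records as JSON Lines: one JSON object per line."""
    with path.open("w", encoding="utf-8") as f:
        for doc in docs:
            f.write(json.dumps(doc, ensure_ascii=False) + "\n")


# A throwaway directory stands in for your project's data folder.
out_dir = Path(tempfile.mkdtemp())
save_tabular(
    [{"id": 1, "amount": 9.99}, {"id": 2, "amount": 4.5}],
    out_dir / "transactions.csv",
)
save_text(
    [{"id": 1, "text": "Great service"}, {"id": 2, "text": "Delivery was late"}],
    out_dir / "feedback.jsonl",
)
```

JSON Lines is convenient for data that keeps growing: each new record can be appended to the end of the file without rewriting it.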

Step 5: Clean and Preprocess the Data

Raw data is often messy and rarely usable as-is. Clean and preprocess it to create high-quality POD:

- Remove Duplicates: Eliminate redundant entries to avoid skewing your dataset.

- Handle Missing Values: Fill gaps by interpolation or with the column mean or median, or remove records that are too incomplete to repair.

- Standardize Formats: Ensure consistency in units, date formats, and categorical labels.

- Remove Outliers: Identify anomalies and remove or correct those that would distort your AI model's performance.

- Normalize Data: Scale numerical data to a standard range (e.g., 0 to 1) for better model training.
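The cleaning steps above can be sketched with pandas. The sketch assumes a hypothetical table with `country`, `signup_date`, and `amount` columns. It makes a few specific choices that suit many cases but not all: median fill for missing values, the 1.5 × IQR rule for outliers, and min-max scaling for normalization. It also standardizes formats *before* removing duplicates, so rows that differ only in spacing or letter case are caught as duplicates.

```python
import pandas as pd


def clean(raw: pd.DataFrame) -> pd.DataFrame:
    """Sketch of a cleaning pass for a hypothetical table with
    'country', 'signup_date', and 'amount' columns."""
    df = raw.copy()

    # Standardize formats first, so rows that differ only in spacing or case
    # are recognized as duplicates in the next step.
    df["country"] = df["country"].str.strip().str.lower()
    df["signup_date"] = pd.to_datetime(df["signup_date"], format="%Y-%m-%d", errors="coerce")

    # Remove exact duplicate rows.
    df = df.drop_duplicates().copy()

    # Handle missing values: fill numeric gaps with the column median.
    df["amount"] = df["amount"].fillna(df["amount"].median())

    # Remove outliers using the 1.5 * IQR rule.
    q1, q3 = df["amount"].quantile([0.25, 0.75])
    iqr = q3 - q1
    df = df[df["amount"].between(q1 - 1.5 * iqr, q3 + 1.5 * iqr)].copy()

    # Normalize: min-max scale amounts into the range [0, 1].
    lo, hi = df["amount"].min(), df["amount"].max()
    df["amount_scaled"] = (df["amount"] - lo) / (hi - lo) if hi > lo else 0.0
    return df


raw = pd.DataFrame({
    "country": ["US", " us", "DE", "FR", "FR", "US"],
    "signup_date": ["2024-01-05", "2024-01-05", "2024-02-10",
                    "2024-03-01", "2024-03-01", "2024-04-12"],
    "amount": [10.0, 10.0, None, 30.0, 30.0, 1000.0],
})
cleaned = clean(raw)
print(cleaned)
```

In a real project, compute the median, quartiles, and min/max on the training split only, then apply them unchanged to new data. scikit-learn's preprocessing module offers scalers that follow this fit/transform pattern.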

Step 6: Validate Data Quality

Before using your dataset, validate its quality:

- Accuracy: Ensure the data is correct and free from errors.

- Completeness: Verify that all necessary fields are populated.

- Consistency: Check for uniformity across records.

- Relevance: Confirm that the data aligns with your objectives.
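Several of these checks can be automated. The sketch below uses pandas to count absent required columns and missing values (completeness), exact duplicate rows (consistency), and values outside an expected range (a partial accuracy check). The function names and the example age range are assumptions. Relevance still needs human review against the objectives from Step 1.

```python
import pandas as pd


def quality_report(df: pd.DataFrame, required: list, valid_ranges: dict) -> dict:
    """Return simple counts for automated quality checks."""
    present = [c for c in required if c in df.columns]
    report = {
        # Completeness: required columns that are absent, and nulls per required column.
        "absent_columns": [c for c in required if c not in df.columns],
        "missing_values": {c: int(df[c].isna().sum()) for c in present},
        # Consistency: exact duplicate rows.
        "duplicate_rows": int(df.duplicated().sum()),
        # Accuracy (partial): non-null values outside the expected range.
        "out_of_range": {},
    }
    for col, (lo, hi) in valid_ranges.items():
        values = df[col].dropna()
        report["out_of_range"][col] = int(((values < lo) | (values > hi)).sum())
    return report


def passes(report: dict) -> bool:
    """True only if every automated check found zero problems."""
    return (
        not report["absent_columns"]
        and not any(report["missing_values"].values())
        and report["duplicate_rows"] == 0
        and not any(report["out_of_range"].values())
    )


df = pd.DataFrame({
    "age": [34, None, 29, 29, 150],
    "country": ["us", "de", "fr", "fr", "us"],
})
report = quality_report(df, required=["age", "country", "email"], valid_ranges={"age": (0, 120)})
print(report)
print(passes(report))  # False
```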

Step 7: Store and Manage Data

Choose a secure and scalable storage solution for your POD:

- Databases: Use SQL or NoSQL databases for structured data.

- Cloud Storage: Leverage cloud platforms like AWS, Google Cloud, or Azure for scalability.

- Data Lakes: Store large volumes of raw data for future processing.
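For a small project, a local SQL database is a reasonable place to start. This sketch uses SQLite through Python's built-in `sqlite3` module as a lightweight stand-in for the SQL option above. Cloud storage and data lakes are not shown. The table layout and function names are hypothetical. The `NOT NULL` and `CHECK` constraints stop bad rows at write time, and the connection's context manager makes each batch insert all-or-nothing.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS transactions (
    id         INTEGER PRIMARY KEY,
    customer   TEXT NOT NULL,
    amount     REAL NOT NULL CHECK (amount >= 0),
    created_at TEXT NOT NULL  -- ISO-8601 date, e.g. '2024-06-01'
)
"""


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def insert_transactions(conn: sqlite3.Connection, rows: list) -> None:
    """Insert rows atomically: either all rows are committed or none are."""
    with conn:  # commits on success, rolls back if any statement raises
        conn.executemany(
            "INSERT INTO transactions (id, customer, amount, created_at) VALUES (?, ?, ?, ?)",
            rows,
        )


conn = open_store()  # pass a file path such as "pod.db" to persist to disk
insert_transactions(conn, [(1, "c-001", 19.99, "2024-06-01"), (2, "c-002", 5.0, "2024-06-02")])
```

A row that violates a constraint raises `sqlite3.IntegrityError`, and the whole batch is rolled back.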

Step 8: Continuously Update and Maintain

Data is not static; it evolves over time. Regularly update your dataset to reflect new information and maintain its relevance:

- Add new records as they become available.

- Remove outdated or irrelevant data.

- Revisit your data cleaning and preprocessing steps periodically.
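The first two maintenance tasks can run as a single scheduled job. The sketch below again uses SQLite. It assumes a hypothetical `records` table with an `updated_at` date column and a 365-day retention window. The job upserts new or changed records with `INSERT OR REPLACE`, then deletes records that have not been updated within the window. Both steps commit together.

```python
import sqlite3
from datetime import date, timedelta


def refresh(conn: sqlite3.Connection, new_rows: list, today: date, retention_days: int = 365) -> int:
    """Upsert new or changed records, then delete records not updated within
    the retention window. Returns the number of deleted rows."""
    cutoff = (today - timedelta(days=retention_days)).isoformat()
    with conn:  # both steps commit together or not at all
        # INSERT OR REPLACE overwrites an existing row with the same primary key.
        conn.executemany(
            "INSERT OR REPLACE INTO records (id, value, updated_at) VALUES (?, ?, ?)",
            new_rows,
        )
        # ISO-8601 date strings sort chronologically, so a text comparison works.
        cur = conn.execute("DELETE FROM records WHERE updated_at < ?", (cutoff,))
    return cur.rowcount


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE records (id INTEGER PRIMARY KEY, value REAL, updated_at TEXT)")
with conn:
    conn.executemany("INSERT INTO records VALUES (?, ?, ?)", [
        (1, 10.0, "2023-01-15"),  # not updated for over a year
        (2, 20.0, "2024-05-01"),
    ])
deleted = refresh(conn, [(2, 25.0, "2024-06-01"), (3, 30.0, "2024-06-01")], today=date(2024, 6, 1))
print(deleted)
```

Whether old records should be deleted or archived depends on the project. If deleted data might be needed for audits or retraining, consider moving it to cheaper storage instead of dropping it.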

Step 9: Document Your Dataset

Create detailed documentation to make your dataset easy to understand and use:

- Metadata: Describe the structure, fields, and sources of your data.

- Data Dictionary: Define each variable and its possible values.

- Collection Methods: Explain how the data was gathered and processed.

- Usage Guidelines: Provide instructions for accessing and using the dataset.
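Part of the documentation can be generated from the data itself, which keeps it in sync as the dataset changes. The sketch below builds a data dictionary from a list of records. For each field it combines a human-written description with the observed Python types, the number of missing values, and, for fields you mark as categorical, the observed values. It then wraps the result in a small metadata document. The dataset name, fields, and metadata text are hypothetical placeholders.

```python
import json


def build_data_dictionary(rows: list, descriptions: dict, categorical: tuple = ()) -> dict:
    """Summarize each documented field: description, observed Python types,
    missing count, and (for categorical fields) the observed values."""
    fields = {}
    for name, description in descriptions.items():
        values = [row.get(name) for row in rows]  # absent keys count as missing
        present = [v for v in values if v is not None]
        entry = {
            "description": description,
            "types": sorted({type(v).__name__ for v in present}),
            "missing": len(values) - len(present),
        }
        if name in categorical:
            entry["observed_values"] = sorted({str(v) for v in present})
        fields[name] = entry
    return fields


rows = [
    {"rating": 5, "channel": "web", "comment": "Fast delivery"},
    {"rating": 3, "channel": "app", "comment": None},
    {"rating": 4, "channel": "web"},
]
doc = {
    "dataset": "customer_feedback",
    "source": "In-app and web survey forms",
    "collection_method": "Survey responses exported nightly; blank comments stored as null",
    "usage": "Internal use only",
    "fields": build_data_dictionary(
        rows,
        {
            "rating": "Satisfaction score from 1 (worst) to 5 (best)",
            "channel": "Where the survey was submitted",
            "comment": "Optional free-text comment",
        },
        categorical=("channel",),
    ),
}
print(json.dumps(doc, indent=2))
```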

Step 10: Test and Iterate

Finally, test your dataset by using it to train a prototype AI model. Evaluate the model's performance and identify any gaps or issues in the data. Iterate on your dataset by:

- Collecting additional data to address gaps.

- Refining preprocessing steps to improve quality.

- Expanding the dataset to cover new scenarios or edge cases.
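One practical way to locate gaps is to break a prototype model's results down by scenario. The sketch below assumes you already have predictions from some prototype model and a hypothetical segment label for each example, such as weather conditions. It flags segments with too few examples, which suggests collecting more data. It also flags segments where accuracy falls below a threshold, which suggests reviewing labels or preprocessing. The thresholds of 30 examples and 0.8 accuracy are arbitrary placeholders.

```python
from collections import Counter, defaultdict


def accuracy_by_segment(segments, y_true, y_pred):
    """Fraction of correct predictions within each segment."""
    hits, totals = defaultdict(int), defaultdict(int)
    for seg, truth, pred in zip(segments, y_true, y_pred):
        totals[seg] += 1
        hits[seg] += int(truth == pred)
    return {seg: hits[seg] / totals[seg] for seg in totals}


def find_gaps(segments, y_true, y_pred, expected_segments, min_count=30, min_accuracy=0.8):
    """Flag expected segments that are under-represented or poorly predicted."""
    counts = Counter(segments)
    accuracy = accuracy_by_segment(segments, y_true, y_pred)
    gaps = {}
    for seg in expected_segments:
        if counts[seg] < min_count:
            gaps[seg] = f"only {counts[seg]} examples: collect more data"
        elif accuracy[seg] < min_accuracy:
            gaps[seg] = f"accuracy {accuracy[seg]:.2f}: review labels and preprocessing"
    return gaps


segments = ["day"] * 40 + ["night"] * 5 + ["rain"] * 35
y_true = [1] * 80
y_pred = [1] * 40 + [1] * 5 + [1] * 20 + [0] * 15
print(find_gaps(segments, y_true, y_pred, expected_segments=["day", "night", "rain", "snow"]))
```

Listing expected segments explicitly matters. A scenario that never appears in the data, like "snow" here, would otherwise go unnoticed.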

Building Plain Old Data from scratch is a meticulous but rewarding process. By following these steps, you can create a clean, structured, and reliable dataset that forms the backbone of your AI systems. Remember, the quality of your data directly impacts the success of your AI initiatives, so invest the time and effort to get it right.

Start building your Plain Old Data today, and unlock the full potential of AI for your business or project!

Helpful Resources

- Data.gov - US Government Open Data

- European Union Open Data Portal

- Kaggle Datasets - Public data sets for machine learning

- Scikit-learn preprocessing documentation - Guide to data preprocessing

- Pandas Documentation - Python data analysis library